MCP: The Quiet Revolution Behind Scalable AI Tool Integrations

Everyone’s building with LLMs. But how do you connect them to real tools—efficiently?

That’s where MCP (Model Context Protocol) quietly steps in—and might just change how we integrate AI forever.

What Is MCP?

MCP (Model Context Protocol) is an open standard, introduced by Anthropic, for connecting AI models to external tools through structured JSON-RPC messages sent over a standard transport such as stdio or HTTP.

At first glance, it might seem simple:

A schema. A spec. A few conventions.

But the impact is huge.

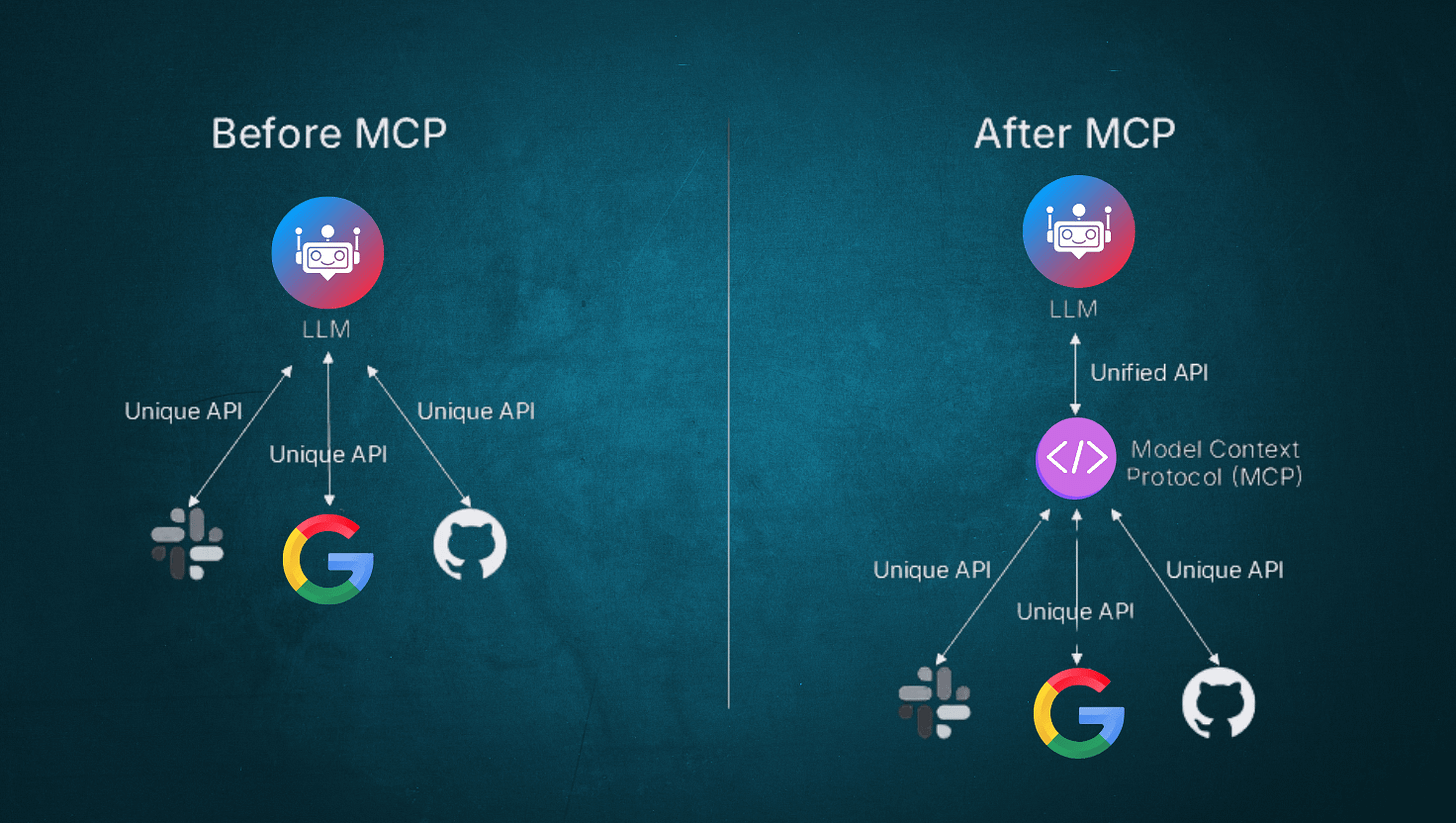

Before MCP, every AI platform or agent had its own integration format. Connecting an AI model to an app or database required a custom integration. Multiply that across M models and N tools, and you get M × N integrations, which is costly and brittle.

MCP changes the game.

The Old Way vs The MCP Way

Before MCP:

Each tool needed a different wrapper for each AI model.

Every new app = new integration work.

Engineering time was wasted maintaining adapters.

No shared interface or protocol across tools.

After MCP:

Tools expose a standard MCP-compatible endpoint (just a structured JSON API).

Any MCP-speaking model or agent can interact with that tool—no custom glue code.

Total integrations = M + N, not M × N.

A single spec to rule them all.

In other words:

Write once, integrate anywhere.
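To make the M × N versus M + N claim concrete, here is a tiny arithmetic sketch. The counts (5 models, 20 tools) are made up purely for illustration, and the function names are hypothetical.

```python
def integrations_without_standard(models: int, tools: int) -> int:
    """Every model needs its own adapter for every tool."""
    return models * tools


def integrations_with_standard(models: int, tools: int) -> int:
    """Each model and each tool implements the shared protocol once."""
    return models + tools


# Hypothetical counts, chosen only for illustration.
models, tools = 5, 20
print(integrations_without_standard(models, tools))  # 100
print(integrations_with_standard(models, tools))     # 25
```

The gap widens as either side grows: adding one more tool costs one new integration with a shared protocol, instead of one per model.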

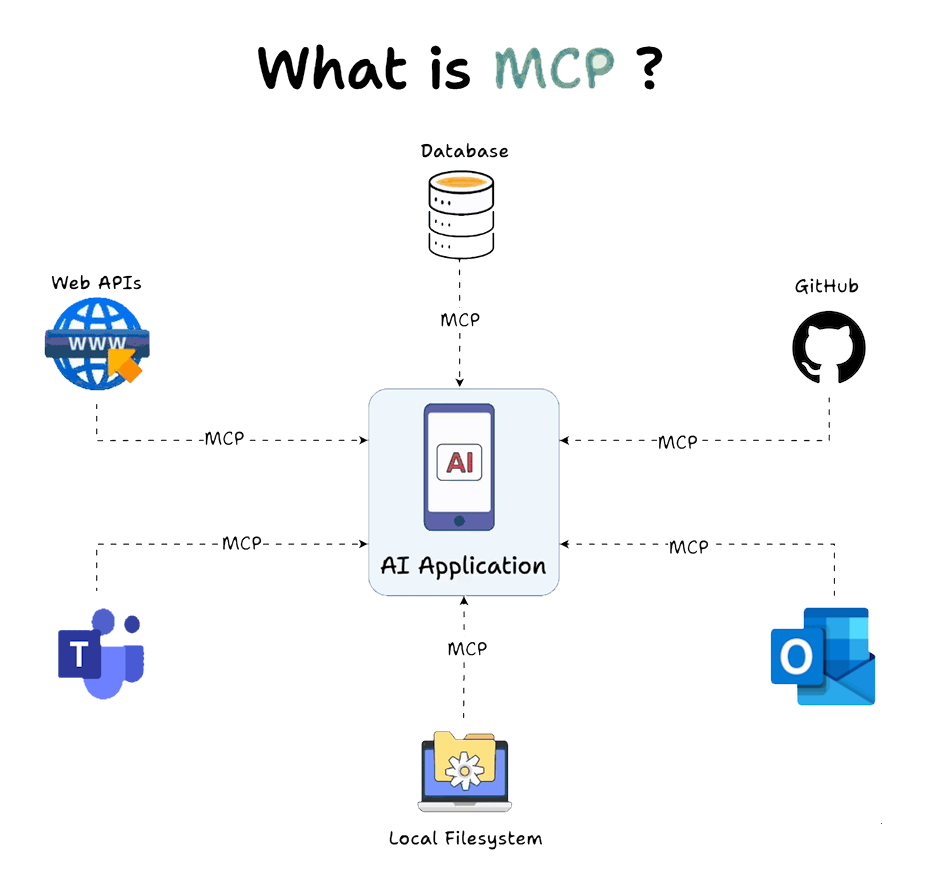

Why MCP Matters in the AI Ecosystem

LLMs like GPT, Claude, and open models are becoming smarter and more agentic. But smart agents need tools: to fetch data, make changes, trigger workflows, and interact with the world.

Without a shared language, every agent talks differently.

MCP gives us that language.

It’s like REST for AI agents. Or GraphQL for tools. A small standard with massive leverage.

Imagine:

You build a customer support tool.

Expose it via MCP.

Now every AI agent—Claude, GPT, or open-source alternatives—can use it without custom code.

That’s interoperability.

That’s future-proof.
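Here is a rough sketch of why no custom code is needed on the agent side. In the published MCP specification, a tool is invoked with a JSON-RPC 2.0 request whose method is `tools/call`, so every client builds the same message shape. The tool name `lookup_ticket` and its `ticket_id` argument are hypothetical, invented for this customer support example.

```python
import json
from itertools import count

# Simple counter so each request gets a unique JSON-RPC id.
_request_ids = count(1)


def build_tool_call(tool_name: str, arguments: dict) -> str:
    """Build the JSON-RPC 2.0 request a client sends to invoke a tool."""
    request = {
        "jsonrpc": "2.0",
        "id": next(_request_ids),
        "method": "tools/call",
        "params": {"name": tool_name, "arguments": arguments},
    }
    return json.dumps(request)


# Hypothetical tool and argument; any MCP client would send this same shape.
message = build_tool_call("lookup_ticket", {"ticket_id": "T-1001"})
print(message)
```

This only covers building the message. A real client also performs an initialization handshake and sends the message over a transport, both of which are left out here.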

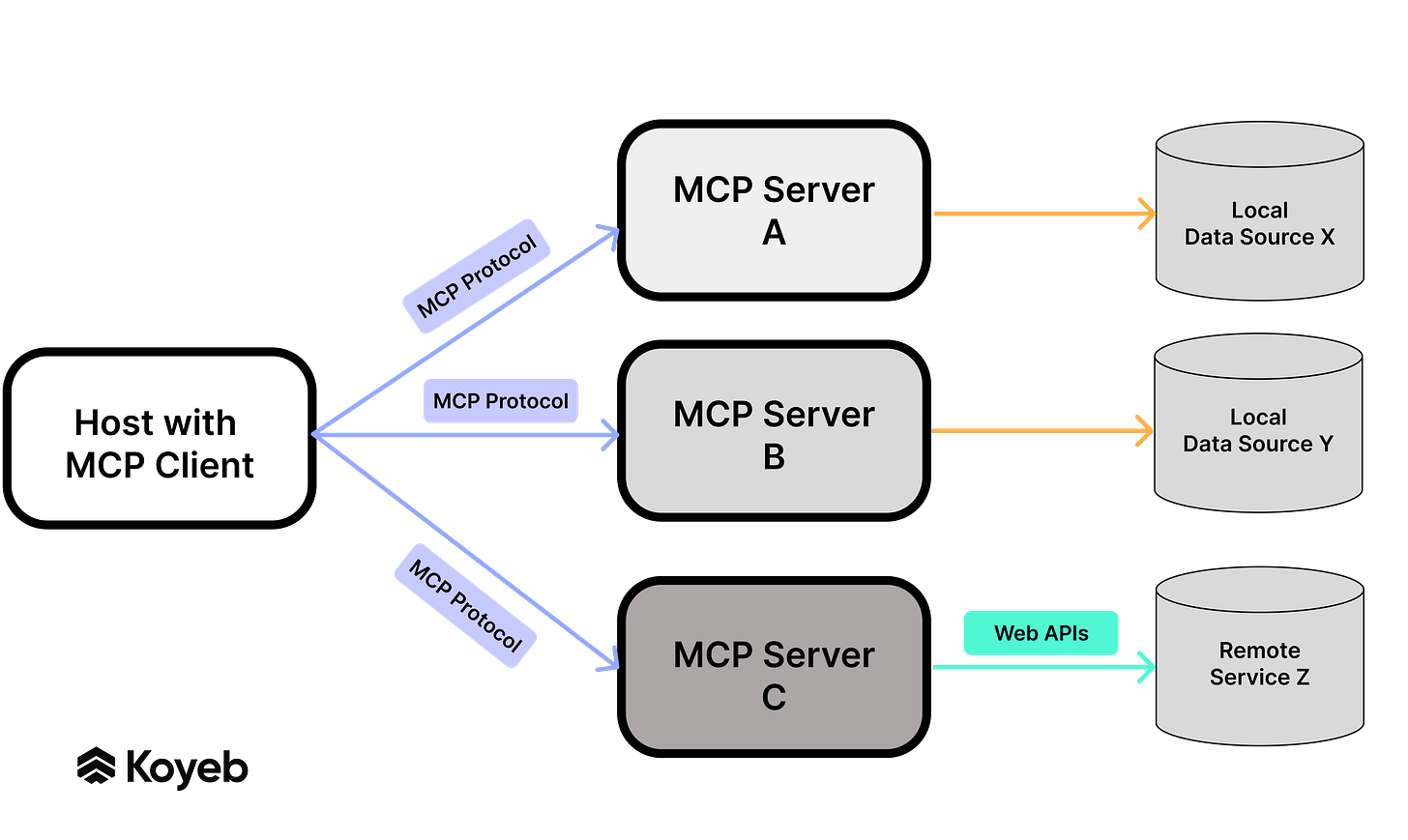

What Does an MCP Integration Look Like?

Simple. You define:

A JSON schema describing inputs and outputs

A few standardized methods (tools/list, tools/call, etc.)

Clear function names and tool descriptions
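The pieces listed above can be sketched as a single tool definition. In the MCP specification a tool is described by a `name`, a `description`, and an `inputSchema` written as JSON Schema. The `lookup_ticket` tool itself is hypothetical, and `check_arguments` is a deliberately simplified helper written for this post, not a full JSON Schema validator.

```python
# Hypothetical tool definition, shaped like an MCP tool descriptor.
LOOKUP_TICKET_TOOL = {
    "name": "lookup_ticket",
    "description": "Look up a support ticket by ID and return its status.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "ticket_id": {"type": "string", "description": "Ticket identifier"},
        },
        "required": ["ticket_id"],
    },
}

# Only a few JSON Schema types are mapped; this is a simplification.
_JSON_TYPES = {"string": str, "object": dict, "array": list}


def check_arguments(tool: dict, arguments: dict) -> list[str]:
    """Return a list of problems; an empty list means the arguments pass.

    Checks only required keys and top-level types.
    """
    schema = tool["inputSchema"]
    problems = []
    for name in schema.get("required", []):
        if name not in arguments:
            problems.append(f"missing required argument: {name}")
    for name, value in arguments.items():
        spec = schema.get("properties", {}).get(name)
        if spec is None:
            continue  # JSON Schema allows extra properties by default
        expected = _JSON_TYPES.get(spec.get("type"))
        if expected is not None and not isinstance(value, expected):
            problems.append(f"argument {name} should be of type {spec['type']}")
    return problems


print(check_arguments(LOOKUP_TICKET_TOOL, {"ticket_id": "T-1001"}))  # []
print(check_arguments(LOOKUP_TICKET_TOOL, {}))
```

In practice you would likely hand validation to a dedicated JSON Schema library. The point here is only that the descriptor is plain data that any client can read.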

If you can write a basic API in Flask, FastAPI, or Express.js, you can build an MCP server.

No SDK required. No magic. Just clarity and compatibility.
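To show how small the core can be, here is a minimal sketch of the server side using only the Python standard library. It assumes the JSON-RPC 2.0 message shapes and the `tools/list` and `tools/call` method names from the MCP specification. The ticket data, the `lookup_ticket` tool, and the `handle_message` function are all hypothetical. It is not a complete MCP server: the initialization handshake, notifications, and the transport layer are omitted.

```python
import json

# Hypothetical in-memory data standing in for a real support system.
TICKETS = {"T-1001": "open", "T-1002": "resolved"}

TOOLS = [{
    "name": "lookup_ticket",
    "description": "Return the status of a support ticket.",
    "inputSchema": {
        "type": "object",
        "properties": {"ticket_id": {"type": "string"}},
        "required": ["ticket_id"],
    },
}]


def lookup_ticket(ticket_id: str) -> str:
    status = TICKETS.get(ticket_id)
    if status is None:
        return f"Ticket {ticket_id} was not found."
    return f"Ticket {ticket_id} is {status}."


HANDLERS = {"lookup_ticket": lookup_ticket}


def _error(request: dict, code: int, message: str) -> str:
    """Build a JSON-RPC 2.0 error response."""
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"),
                       "error": {"code": code, "message": message}})


def handle_message(raw: str) -> str:
    """Dispatch one JSON-RPC request and return the JSON response."""
    request = json.loads(raw)
    method = request.get("method")
    params = request.get("params", {})
    if method == "tools/list":
        result = {"tools": TOOLS}
    elif method == "tools/call":
        handler = HANDLERS.get(params.get("name"))
        if handler is None:
            return _error(request, -32602, "Unknown tool")
        try:
            text, failed = handler(**params.get("arguments", {})), False
        except TypeError as exc:  # wrong or missing arguments
            text, failed = str(exc), True
        result = {"content": [{"type": "text", "text": text}], "isError": failed}
    else:
        return _error(request, -32601, "Method not found")
    return json.dumps({"jsonrpc": "2.0", "id": request.get("id"), "result": result})


call = {"jsonrpc": "2.0", "id": 1, "method": "tools/call",
        "params": {"name": "lookup_ticket", "arguments": {"ticket_id": "T-1001"}}}
print(handle_message(json.dumps(call)))
```

Wiring `handle_message` to stdin/stdout or to a route in Flask, FastAPI, or Express.js is the remaining step. For anything beyond an experiment, the official SDKs handle the parts skipped here.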

Real-World Benefits

Fast development — Integrate tools into multiple AI agents in hours, not weeks

Scalable infrastructure — Add new tools without rebuilding pipelines

Cross-platform support — Works with Anthropic Claude, OpenAI GPT, and others

Open standard — You’re not locked into one company’s ecosystem

Future-ready — As AI agents become the norm, MCP ensures your tools remain compatible

What’s Next for MCP?

More open-source tooling (SDKs, validators, test servers)

Widespread adoption by AI agents and orchestration platforms

Community-driven evolution of the spec

Native support from providers like OpenAI, Anthropic, Hugging Face, and others

Final Thoughts

MCP might seem like “just a spec” — but it’s a turning point.

It means AI tools no longer need to live in silos.

It means integrations scale without friction.

It means building AI products feels a lot more like building web apps — with standards, clarity, and speed.

In a world moving toward AI-native software, MCP is the connector we didn’t know we needed.

Thanks for reading. Subscribe to GrowTechie for more updates like this.

Follow me on socials:

Twitter: https://x.com/techwith_ram

Instagram: https://www.instagram.com/techwith.ram/