Top 10 LLM & RAG Projects for Your AI Portfolio (2025–26)

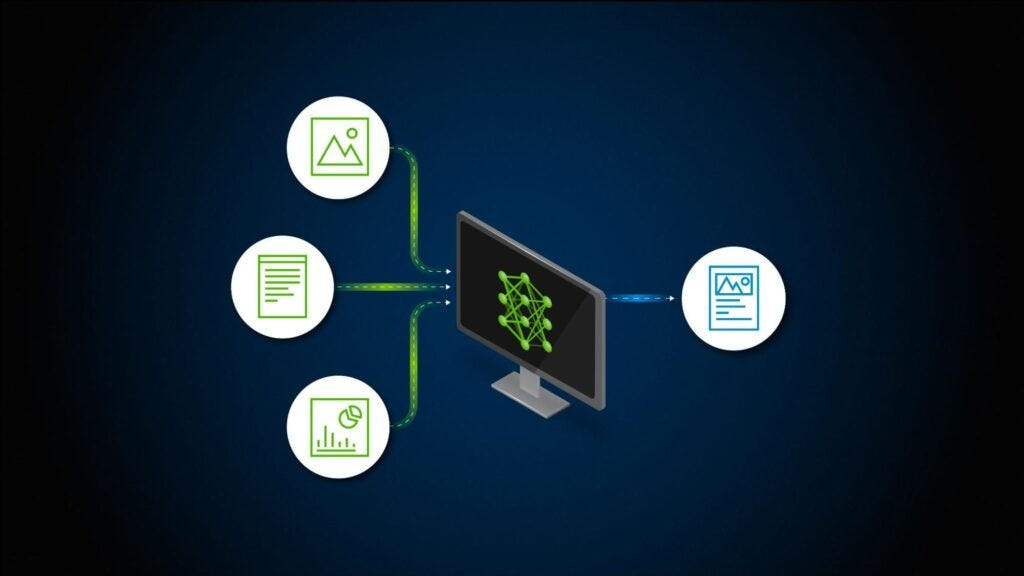

Retrieval-Augmented Generation (RAG) is like giving your AI a memory upgrade and a Google search bar. Instead of making up answers based on what it “thinks” it learned during training, your model can fetch fresh, relevant info at query time. Basically, it hallucinates less and can cite its sources.

Imagine ChatGPT but with access to your favorite bookmarks, PDFs, Slack threads, and weirdly named Google Docs you forgot existed. It’s like turning your AI into the friend who actually reads the group chat history before replying.

In practice, this means smarter, fresher, and far more context-aware responses. Think of an AI assistant that doesn’t just guess but double-checks its facts first (finally, some accountability in the relationship).

Below are 10 creative, beginner-friendly project ideas that combine LLMs with RAG — each with a memorable name, a pinch of purpose, and just the right amount of tech sauce.

So grab your favorite Python IDE (for me, it’s VS Code or Cursor), fire up your vector store, maybe even open a Streamlit tab, and let your AI work overtime while your coffee gets cold.

Let’s dive in.

1. CodeWhisperer—Developer Documentation Chatbot

Tools & Technologies: PyPI (to load code/docs), LangChain or LlamaIndex (for document loaders and chains), FAISS or Chroma (vector store), GPT-4 / LLaMA-2 (LLM), and a simple front-end (Streamlit or a Slack bot).

Step-by-Step Design Process:

Collect docs: Scrape or download project documentation (e.g. Markdown files, API docs).

Preprocess: Split large files into ~500-token chunks and generate embeddings with an embedding model.

Index: Store all embeddings (with source pointers) in FAISS.

Query & Retrieve: When a user asks a code question, embed the query and find the top-matching doc chunks.

Generate: Pass those chunks plus the question to the LLM (via LangChain) to produce a clear answer or code snippet.

UI: Display the answer with highlights of source lines and allow follow-up queries.
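The collect, chunk, index, and retrieve steps above can be sketched in plain Python. This is a minimal, dependency-free sketch under stated assumptions: whitespace-separated words stand in for model tokens, a bag-of-words count vector stands in for a real embedding model, and a brute-force cosine search stands in for FAISS. The names `chunk_text`, `embed`, and `DocIndex`, and the two sample doc files, are hypothetical.

```python
import math
import re
from collections import Counter

def chunk_text(text, source, max_tokens=500):
    """Split text into chunks of at most max_tokens words (words approximate tokens)."""
    words = text.split()
    chunks = []
    for start in range(0, len(words), max_tokens):
        chunks.append({
            "text": " ".join(words[start:start + max_tokens]),
            "source": source,   # source pointer back to the original file
            "offset": start,    # word offset, so the UI can highlight the passage
        })
    return chunks

def embed(text):
    """Assumption: toy bag-of-words vector standing in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9_]+", text.lower()))

def cosine(a, b):
    dot = sum(a[t] * b[t] for t in a if t in b)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0

class DocIndex:
    """Brute-force vector index; FAISS would replace this at scale."""
    def __init__(self):
        self.entries = []  # list of (vector, chunk) pairs

    def add(self, chunks):
        for chunk in chunks:
            self.entries.append((embed(chunk["text"]), chunk))

    def search(self, query, k=3):
        q = embed(query)
        scored = [(cosine(q, vec), chunk) for vec, chunk in self.entries]
        scored.sort(key=lambda pair: pair[0], reverse=True)
        return [chunk for score, chunk in scored[:k] if score > 0]

# Hypothetical sample docs
docs = {
    "auth.md": "Call login(username, password) to get a session token. Tokens expire after one hour.",
    "upload.md": "Use upload_file(path) to send a file. Large files are split into parts automatically.",
}

index = DocIndex()
for name, body in docs.items():
    index.add(chunk_text(body, source=name, max_tokens=500))

for hit in index.search("how do I get a session token", k=1):
    print(hit["source"], "->", hit["text"])
```

Swapping in a real embedding model and FAISS would replace `embed` and `DocIndex`; the design choice worth keeping is that every chunk carries its `source` and `offset`, which is what lets the UI highlight source lines.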

Real-World Applications: Internal developer help desk (answering API questions), onboarding chatbots for coding projects, Slack or GitHub Copilot–style assistants.

Bonus Upgrade Ideas: Add syntax-aware parsing so it can pull actual code examples, integrate with GitHub for live code lookup, or make a VS Code extension for in-IDE help.

2. LegalEagle—AI-Powered Contract Assistant

Need to know what that confusing contract clause really means? LegalEagle is a RAG chatbot for legal docs. It loads statutes, contracts, or case law and answers questions in plain English. By searching through real laws and rulings, it helps lawyers and paralegals find relevant information quickly. (RAG is great for law because it lets the AI search through case law and statutes rather than relying only on what it memorized during training.)

Tools & Technologies: Python (PyMuPDF or pdfplumber for PDFs), OpenAI/Anthropic LLM, Pinecone or Qdrant (vector DB), LangChain or Haystack, and a React or Streamlit front-end.

Step-by-Step Design Process:

Data Ingestion: Upload laws, regulations, or contracts (as PDFs or text).

Chunking & Embedding: Split into sections/paragraphs; create embeddings.

Indexing: Store embeddings in the vector DB with references to doc and page.

Semantic Search: On a query (e.g., “What are my rights under the privacy clause?”), find top matching chunks.

Answer Generation: Feed retrieved snippets to the LLM with a prompt like “Based on these excerpts, what does the contract say about X?”

UI & Interaction: Show the answer and the highlighted source text, and allow follow-up questions or summary downloads.
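The answer-generation step works best when each retrieved chunk keeps its document and page reference, so the LLM can cite them and the UI can highlight them. Below is a minimal sketch of prompt assembly only, assuming retrieval returns dictionaries with hypothetical `doc`, `page`, and `text` fields; the contract snippets are made up, and the actual LLM call is omitted because it depends on your provider's SDK.

```python
def build_prompt(question, chunks):
    """Assemble a grounded prompt that asks the LLM to cite numbered excerpts."""
    excerpt_lines = []
    for i, chunk in enumerate(chunks, start=1):
        # Keep doc name and page next to each excerpt so citations are traceable.
        excerpt_lines.append(f"[{i}] ({chunk['doc']}, p. {chunk['page']}) {chunk['text']}")
    excerpts = "\n".join(excerpt_lines)
    return (
        "You are a legal research assistant. Answer in plain English using ONLY the excerpts below.\n"
        "Cite excerpts by number, e.g. [1]. If the excerpts do not answer the question, say so.\n\n"
        f"Excerpts:\n{excerpts}\n\n"
        f"Question: Based on these excerpts, {question}"
    )

# Hypothetical retrieval results (made-up contract text)
retrieved = [
    {"doc": "service_agreement.pdf", "page": 4,
     "text": "The Provider shall not share Customer data with third parties without written consent."},
    {"doc": "service_agreement.pdf", "page": 9,
     "text": "Customer may request deletion of stored data at any time."},
]

prompt = build_prompt("what does the contract say about sharing my data?", retrieved)
print(prompt)
```

Telling the model to say so when the excerpts do not cover the question is a simple guard against it filling gaps from memory, which matters more in legal use than in most domains.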

Real-World Applications: Law firms or compliance teams searching internal policies, consumer legal Q&A chatbots, and contract review assistants.

Bonus Upgrade Ideas: Add filters by jurisdiction or date, support multiple languages (e.g., querying the English and French versions of the GDPR side by side), implement a feedback loop to refine answers, or integrate a knowledge graph for legal entities.

3. MediGuru — AI Medical Q&A Assistant

Imagine an AI that can quickly find medical information in research papers (not a substitute for a doctor, but a very clever librarian for health info). MediGuru lets you ask questions like “What are the latest COPD treatments?” and searches medical journals or guidelines to give an answer. Because healthcare knowledge changes fast, RAG is a great fit here: the AI pulls fresh, relevant info from trusted sources instead of relying on outdated memory, which tends to make its answers more accurate and up to date.

Tools & Technologies: LangChain (document loaders), Hugging Face/BioMed embedding model, a vector store (Chroma or Weaviate), OpenAI/GPT-4 or Claude (LLM), and a Streamlit or Flask interface.

Step-by-Step Design Process:

Gather Data: Pull abstracts/articles from PubMed, WHO, or hospital protocols.

Preprocess: Clean text, split into sections (e.g. “Diagnosis,” “Treatment”).

Embedding: Generate embeddings (BioBERT or OpenAI text-embedding).

Index: Store vectors in the vector store (Chroma or Weaviate) with doc links.

Query & Retrieve: User asks a medical question; system finds relevant passages.

Answering: LLM synthesizes an answer (with citations to source texts).

UI: Present answer + link to sources; include disclaimers and “ask doctor” follow-ups.
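The preprocessing step splits documents into sections such as “Diagnosis” and “Treatment.” One way to do that is to walk the text line by line and start a new chunk at each heading, tagging every chunk with its section so citations can name it. This is a minimal sketch assuming section titles appear on their own lines and come from a known list; `SECTION_TITLES`, `split_sections`, and the placeholder guideline text are hypothetical.

```python
# Assumption: a fixed, hand-written list of section headings
SECTION_TITLES = {"background", "diagnosis", "treatment", "prognosis"}

def split_sections(text, source):
    """Split text into section chunks wherever a known heading appears on its own line."""
    sections = []
    current_title, current_lines = "preamble", []
    for line in text.splitlines():
        stripped = line.strip()
        if stripped.rstrip(":").lower() in SECTION_TITLES:
            # A new heading closes the previous section (if it had any content).
            if current_lines:
                sections.append({"source": source, "section": current_title,
                                 "text": " ".join(current_lines)})
            current_title, current_lines = stripped.rstrip(":"), []
        elif stripped:
            current_lines.append(stripped)
    if current_lines:
        sections.append({"source": source, "section": current_title,
                         "text": " ".join(current_lines)})
    return sections

# Placeholder document (no real medical content)
guideline = """
Diagnosis:
(diagnostic criteria text goes here)
Treatment:
(first-line treatment text goes here)
(second paragraph of treatment text)
"""

for sec in split_sections(guideline, source="copd_guideline.txt"):
    print(f"[{sec['source']} / {sec['section']}] {sec['text']}")
```

Each chunk's `section` field can then be shown in the citation (for example “copd_guideline.txt / Treatment”), which makes answers easier to verify.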

Real-World Applications: Hospital knowledge bases for doctors, patient symptom checkers (non-diagnostic), medical research assistants summarizing papers.

Bonus Upgrade Ideas: Enable citations (e.g. footnote journal names), fine-tune the LLM on medical Q&A, add symptom checker flows, or connect to wearable data (heart rate, etc.) for personalized tips.

4. LearnBot — Personalized Tutoring Assistant

Want a study buddy? LearnBot lets students chat with an AI tutor that pulls answers from textbooks and notes. For example, if you ask “Explain Newton’s second law,” it can retrieve definitions or examples from science texts instead of guessing. This keeps answers grounded in the course material and domain-specific (RAG systems tend to give more accurate, up-to-date responses).

Tools & Technologies: LangChain, open educational resources (Khan Academy, Wikipedia), VectorDB (Chroma), GPT-4 or a fine-tuned open LLM, and a chat UI (Discord bot or Streamlit).

Step-by-Step Design Process:

Load Learning Material: Ingest textbooks, lecture notes, or Q&A sets.

Chunk & Embed: Split chapters into bite-sized chunks, embed them.

Indexing: Store vectors in the database with topic labels.

Query: Student asks a question.

Semantic Retrieval: Find relevant passages (e.g. from algebra or history text).

Teaching Response: LLM crafts an explanation, quiz, or example problem, using the retrieved content.

Feedback Loop: Allow student to ask follow-ups or rate clarity.
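Storing topic labels alongside the vectors lets retrieval narrow the search before ranking, so a physics question does not pull passages from a history text. A minimal sketch, assuming each indexed chunk carries a hypothetical `topic` field and using simple word overlap as a stand-in for embedding similarity:

```python
import re

def tokens(text):
    """Lowercase word set (keeps apostrophes, e.g. "newton's")."""
    return set(re.findall(r"[a-z']+", text.lower()))

def retrieve(chunks, question, topic=None, k=2):
    """Rank chunks by word overlap with the question, optionally restricted to one topic.

    Assumption: word overlap stands in for vector similarity.
    """
    q = tokens(question)
    candidates = [c for c in chunks if topic is None or c["topic"] == topic]
    scored = sorted(candidates, key=lambda c: len(q & tokens(c["text"])), reverse=True)
    return [c for c in scored[:k] if q & tokens(c["text"])]

chunks = [
    {"topic": "physics", "text": "Newton's second law states that force equals mass times acceleration."},
    {"topic": "physics", "text": "Newton's first law describes inertia."},
    {"topic": "history", "text": "Newton was elected president of the Royal Society in 1703."},
]

for c in retrieve(chunks, "Explain Newton's second law", topic="physics", k=1):
    print(c["text"])
```

The filter runs before ranking, so the topic label acts as a hard constraint; most vector databases offer a similar metadata filter on queries.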

Real-World Applications: Online tutoring services, homework help chatbots, language learning assistants.

Bonus Upgrade Ideas: Add multi-turn tutoring (track student progress in memory), generate practice quizzes, incorporate voice (so it reads answers aloud), or connect to an exam-prep database.

5. NewsDigest — News Summarizer & Q&A

Too many news sources, too little time? NewsDigest scans the latest articles, then uses RAG to summarize them or answer questions. For instance, it could pull quotes from multiple news outlets to answer “What’s happening with the global economy?” By combining retrieval with generative AI, it delivers contextually rich summaries (RAG has been shown to improve tasks like summarization and question answering).

Tools & Technologies: News API or RSS scrapers, text splitters, LangChain/Arxiv-lingua (for multi-language summarization), VectorDB (FAISS/Pinecone), GPT or an open-source LLM (for summarizing), and a web dashboard.

Step-by-Step Design Process:

Ingest News: Collect headlines/articles from RSS feeds or APIs.

Preprocess: Filter by date/keyword, clean HTML, chunk long articles.

Embedding: Create vectors for each chunk.

Indexing: Store embeddings with their publication dates so results can be filtered and sorted chronologically.

Query & Retrieval: When asked about a topic, fetch the top related chunks from recent articles.

Generate Summary: LLM writes a concise summary or bulleted list of key points.

UI: Show the digest with links to source articles, and let users subscribe by topic or by email.
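The preprocessing and chronological indexing steps can be sketched with only the standard library. This assumes articles arrive as dictionaries with hypothetical `html`, `published`, and `url` fields (real feeds and news APIs each use their own schema). `html.parser` strips tags and script contents, and each cleaned article keeps its timestamp so retrieval can prefer recent coverage. The sample articles are made up.

```python
from datetime import datetime, timedelta, timezone
from html.parser import HTMLParser

class TextExtractor(HTMLParser):
    """Collect visible text, skipping <script> and <style> contents."""
    def __init__(self):
        super().__init__()
        self.parts = []
        self._skip_depth = 0

    def handle_starttag(self, tag, attrs):
        if tag in ("script", "style"):
            self._skip_depth += 1

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        if not self._skip_depth and data.strip():
            self.parts.append(data.strip())

def clean_html(html):
    parser = TextExtractor()
    parser.feed(html)
    parser.close()
    return " ".join(parser.parts)

def preprocess(articles, keyword, max_age_days, now):
    """Keep recent articles that mention the keyword, newest first."""
    cutoff = now - timedelta(days=max_age_days)
    kept = []
    for art in articles:
        text = clean_html(art["html"])
        if art["published"] >= cutoff and keyword.lower() in text.lower():
            kept.append({"url": art["url"], "published": art["published"], "text": text})
    return sorted(kept, key=lambda a: a["published"], reverse=True)

# Hypothetical sample articles
now = datetime(2025, 6, 1, tzinfo=timezone.utc)
articles = [
    {"url": "https://example.com/a", "published": now - timedelta(days=1),
     "html": "<p>Central banks discuss the <b>economy</b>.</p><script>track()</script>"},
    {"url": "https://example.com/b", "published": now - timedelta(days=30),
     "html": "<p>Old economy story.</p>"},
    {"url": "https://example.com/c", "published": now - timedelta(hours=3),
     "html": "<p>Sports roundup.</p>"},
]

for art in preprocess(articles, keyword="economy", max_age_days=7, now=now):
    print(art["published"].date(), art["url"], art["text"])
```

The surviving articles would then be chunked and embedded, with `published` stored as metadata so the retrieval step can filter on it.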

Real-World Applications: News aggregation sites, market intelligence reports, daily briefing emails.

Bonus Upgrade Ideas: Add sentiment analysis (positive/negative news), trend charts (using retrieved data), fact-checking against official sources, or multilingual support.

6. TripPlanner AI — Smart Travel Itinerary Generator

Wish your AI friend could plan your vacation? TripPlanner AI asks for preferences (beach, budget, dates) and scrapes travel sites, then uses RAG to compile a day-by-day itinerary. For example, it might pull hotel info and local events from up-to-date sources. This is perfect for travel planning because it can fetch real-time data (weather, flight status, etc.) instead of outdated info.

Tools & Technologies: Web scrapers (for airlines, hotels, reviews), Google Maps API, LangChain (for query handling), VectorDB (Qdrant), GPT-4o (for natural language planning), and a React or mobile UI.

Step-by-Step Design Process:

Data Collection: Gather data on destinations (photos, attractions, transport) from TripAdvisor, Wikipedia, etc.

Preprocessing: Geotag information, chunk by location or theme.

Embeddings: Generate vectors for attractions, tips, reviews.

Index: Store vectors with geodata.

Query: User inputs “3-day London itinerary for families.”

Retrieval: Pull relevant descriptions (museums, parks, restaurants).

Answer Generation: LLM organizes them into a schedule with explanations.

UI: Display itinerary with maps and booking links.
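Storing vectors with geodata pays off at retrieval time: candidates can be limited to a radius around the destination (and to tags like “family”) before the LLM builds the schedule. A minimal sketch using the haversine great-circle distance; the attraction list, its approximate coordinates, and the `near` helper are illustrative assumptions. A vector DB such as Qdrant can apply a similar geo filter inside the query itself.

```python
import math

EARTH_RADIUS_KM = 6371.0

def haversine_km(lat1, lon1, lat2, lon2):
    """Great-circle distance between two (lat, lon) points in kilometres."""
    phi1, phi2 = math.radians(lat1), math.radians(lat2)
    dphi = math.radians(lat2 - lat1)
    dlmb = math.radians(lon2 - lon1)
    a = math.sin(dphi / 2) ** 2 + math.cos(phi1) * math.cos(phi2) * math.sin(dlmb / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(a))

def near(places, center, radius_km, tag=None):
    """Return places within radius_km of center (optionally with a tag), closest first."""
    lat0, lon0 = center
    hits = []
    for p in places:
        d = haversine_km(lat0, lon0, p["lat"], p["lon"])
        if d <= radius_km and (tag is None or tag in p["tags"]):
            hits.append((d, p))
    hits.sort(key=lambda pair: pair[0])
    return [p for _, p in hits]

# Hypothetical indexed attractions (approximate coordinates)
places = [
    {"name": "British Museum", "lat": 51.5194, "lon": -0.1270, "tags": ["museum", "family"]},
    {"name": "Hyde Park", "lat": 51.5073, "lon": -0.1657, "tags": ["park", "family"]},
    {"name": "Stonehenge", "lat": 51.1789, "lon": -1.8262, "tags": ["heritage"]},
]

central_london = (51.5074, -0.1278)
for p in near(places, central_london, radius_km=10, tag="family"):
    print(p["name"])
```

Only the nearby, family-tagged candidates are then passed to the LLM, which keeps a “3-day London itinerary” from drifting to places two hours away.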

Real-World Applications: Travel agency chatbots, vacation planning apps, voice assistants (e.g. an Alexa skill).

Bonus Upgrade Ideas: Add integration with booking engines (flights, hotels), user ratings to refine suggestions, dynamic adjustment (if you stay longer, recompute), or AR features (point your camera and ask what’s nearby).

7. ShopAdvisor — E-Commerce Customer Assistant

Turn your product manuals and FAQs into a smart shopping assistant. ShopAdvisor lets customers ask questions like “Does this phone case fit the iPhone 14?” and retrieves answers from product specs and reviews. In customer service, RAG can pull real product information and customer history to give tailored answers, which beats generic chatbot replies.

Tools & Technologies: VectorDB (Weaviate or Pinecone), LangChain (RetrievalQA chain), product catalog data (CSV or Shopify API), GPT-4o (LLM), and a web or chat interface (Zendesk/WhatsApp).

Step-by-Step Design Process:

Import Product Data: Load descriptions, manuals, spec sheets.

Text Splitting: Break specs/reviews into chunks.

Embedding: Create embeddings and index them.

Query: Customer asks a question about a product.

Search: Retrieve relevant chunks (images, text).

Answer & Explain: LLM composes the answer and can even quote the manual.

UI: Show answer plus links to product pages, let user “click to buy.”
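Product catalogs usually arrive as structured rows (a CSV export or an API response), while retrieval works on text. One way to bridge the two, sketched under the assumption of a CSV with hypothetical `sku`, `name`, `compatible_with`, and `description` columns (the rows are made up), is to render each row into a short text chunk and keep the SKU as metadata so the UI can link back to the product page. For a compatibility question like the iPhone 14 example, an exact match on the structured field is also more reliable than similarity search alone.

```python
import csv
import io

# Hypothetical catalog export; compatible models are ';'-separated
CATALOG_CSV = """sku,name,compatible_with,description
CASE-14,Slim Case,iPhone 14;iPhone 13,Matte shell with raised camera lip.
CASE-15,Rugged Case,iPhone 15,Drop-tested shell with belt clip.
"""

def rows_to_chunks(csv_text):
    """Turn each catalog row into one retrievable text chunk plus metadata."""
    chunks = []
    for row in csv.DictReader(io.StringIO(csv_text)):
        models = [m.strip() for m in row["compatible_with"].split(";") if m.strip()]
        text = (f"{row['name']} (SKU {row['sku']}). Fits: {', '.join(models)}. "
                f"{row['description']}")
        chunks.append({"sku": row["sku"], "fits": models, "text": text})
    return chunks

def answer_fit_question(chunks, phone_model):
    """Exact metadata lookup for 'does X fit Y' questions, checked before any LLM call."""
    return [c["sku"] for c in chunks if phone_model in c["fits"]]

chunks = rows_to_chunks(CATALOG_CSV)
print(chunks[0]["text"])
print(answer_fit_question(chunks, "iPhone 14"))
```

The `text` field is what gets embedded; the `sku` and `fits` fields stay as metadata for filtering and for the “click to buy” link.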

Real-World Applications: Retail chatbots, automated FAQ pages, after-sales support (e.g. troubleshooting devices).

Bonus Upgrade Ideas: Add voice support (call center), translate Q&As for global customers, integrate customer account data for personalization, or upsell related products.

8. JobMate — AI Resume & Interview Coach

Get hired faster with an AI career coach. JobMate ingests job descriptions and career advice articles. When you ask “How do I tailor my resume for a data scientist role?”, it retrieves relevant tips (skills, keywords) and even drafts bullet points. It can also simulate interview questions by finding common ones for your field.

Tools & Technologies: Scraped data from Indeed/LinkedIn (job posts), Stack Overflow (for technical Q&A), LangChain, FAISS, GPT (or an open interview-specific LLM), and a simple web app.

Step-by-Step Design Process:

Data Gathering: Collect sample job ads and successful resumes.

Preprocessing: Extract responsibilities and required skills.

Embedding: Vectorize job requirements and resume tips.

Indexing: Store embeddings.

Query: User inputs their profile and target role.

Retrieval: Find matching skills and keywords.

Generate: LLM suggests resume edits or common interview questions.

UI: Let user refine answers, export resume.
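The retrieval step (“find matching skills and keywords”) can start as a simple gap analysis before the LLM drafts anything: compare the skills a job post asks for against those in the user's resume, then hand the “missing” list to the LLM for resume edits or interview questions. A minimal sketch, assuming a small hand-written skill vocabulary (`SKILLS` is hypothetical; a real system would extract skills from the indexed job ads), with made-up job and resume text:

```python
import re

# Assumption: tiny hand-written skill vocabulary
SKILLS = {"python", "sql", "machine learning", "statistics", "docker", "tableau", "spark"}

def extract_skills(text):
    """Find vocabulary skills mentioned in text (whole-word, case-insensitive)."""
    lowered = text.lower()
    return {s for s in SKILLS if re.search(r"\b" + re.escape(s) + r"\b", lowered)}

def skill_gap(job_post, resume):
    """Split the job's required skills into those the resume covers and those it lacks."""
    required = extract_skills(job_post)
    have = extract_skills(resume)
    return {"matched": sorted(required & have), "missing": sorted(required - have)}

job = "Data Scientist: Python, SQL, statistics and machine learning; Spark a plus."
resume = "Built machine learning models in Python; dashboards in Tableau."

gap = skill_gap(job, resume)
print("Matched:", gap["matched"])
print("Missing:", gap["missing"])
```

Passing only the gap (rather than the whole resume and job post) gives the LLM a focused prompt for suggesting bullet points.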

Real-World Applications: University career centers, job search platforms, talent coaching services.

Bonus Upgrade Ideas: Add live practice interviews (speech-to-text), connect to LinkedIn to auto-fill info, incorporate salary trends, or use reinforcement learning to rank the best resume phrasing.

9. BrainyBinder — Personal Knowledge Base

Build your own “Second Brain.” BrainyBinder takes your notes, PDFs, and bookmarks, then lets you query your personal archive. For example, you could ask, “What did I learn about neural networks in Q1?” and it will fetch answers from your saved docs. The AI essentially becomes your personal librarian, combining all your sources so nothing you saved gets forgotten.

Tools & Technologies: LangChain or LlamaIndex (for various data loaders: Git, Google Docs, Markdown), a local vector store (Chroma or Qdrant), GPT-4o (LLM), and an Electron or web interface.

Step-by-Step Design Process:

Ingest Personal Files: Link Google Drive, Notion, or local folders.

Chunk & Embed: Process each document/note, generate embeddings.

Index: Keep all embeddings in one unified index (optionally linked into a knowledge graph of topics).

Query: User asks about something (project details, a past lecture, etc.).

Retrieve: Find the best-matching notes or emails.

Answer: LLM synthesizes a coherent summary or answer.

UI: Display answer with links to original notes; allow tagging or rating.

Real-World Applications: Researchers managing literature, students organizing study materials, professionals keeping track of meetings/ideas.

Bonus Upgrade Ideas: Semantic tagging and filtering (date, project), mobile sync (search on phone), proactive reminders (“You haven’t looked at this file in a month — summary?”), or multi-agent setup (one agent for each domain of knowledge).
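A question like “What did I learn about neural networks in Q1?” mixes a topic with a time window, which is where the date and project filters from the upgrade list help. A minimal sketch, assuming each note is stored with hypothetical `created`, `tags`, and `path` metadata (the notes are made up): the quarter is turned into a date range and applied before any semantic ranking.

```python
from datetime import date

def quarter_range(year, quarter):
    """Return (start, end_exclusive) dates for a calendar quarter (1-4)."""
    start = date(year, 3 * (quarter - 1) + 1, 1)
    end = date(year + 1, 1, 1) if quarter == 4 else date(year, 3 * quarter + 1, 1)
    return start, end

def filter_notes(notes, year, quarter, tag=None):
    """Keep notes created in the given quarter, optionally with a given tag."""
    start, end = quarter_range(year, quarter)
    return [n for n in notes
            if start <= n["created"] < end and (tag is None or tag in n["tags"])]

# Hypothetical note metadata
notes = [
    {"path": "notes/backprop.md", "created": date(2025, 2, 10), "tags": {"neural-networks"}},
    {"path": "notes/cnn-ideas.md", "created": date(2025, 5, 3), "tags": {"neural-networks"}},
    {"path": "notes/budget.md", "created": date(2025, 1, 20), "tags": {"finance"}},
]

for note in filter_notes(notes, 2025, quarter=1, tag="neural-networks"):
    print(note["path"])
```

Only the filtered notes are then ranked by similarity and summarized, and their `path` values become the links back to the original notes.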

10. ChefAI — Cooking & Recipe Assistant

Never wonder what’s for dinner again! ChefAI can chat about recipes and cooking tips. You point it to your favorite cookbooks or food blogs; then ask “What can I make with spinach and chickpeas?” It retrieves matching recipes and even suggests tweaks (gluten-free substitutes, spice levels).

Tools & Technologies: Recipes dataset (Kaggle or scraped sites), OpenAI embeddings or Sentence Transformers, LangChain (for QA chains), GPT-4o or a multilingual LLM (cooking terms), and a UI (mobile app or website).

Step-by-Step Design Process:

Collect Recipes: Scrape recipe sites or import a recipe book (structured with ingredients/instructions).

Preprocess: Normalize ingredients, split steps into sentences.

Embedding: Vectorize each ingredient list or step.

Index: Store in FAISS.

Query: User lists available ingredients or dish ideas.

Retrieve: Find similar recipes.

Generate: LLM suggests a recipe or adapts one (“Add more garlic,” etc.).

UI: Show recipe, nutritional info, and allow adjustments (servings, diet).
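For “What can I make with spinach and chickpeas?”, normalizing ingredients matters as much as the model: “Chickpeas,” “chick peas,” and “garbanzo beans” should all match. A minimal sketch, assuming a tiny hand-written synonym table (`SYNONYMS` is hypothetical) and ranking made-up recipes by Jaccard similarity between the user's ingredients and each recipe's ingredients, as a transparent stand-in for embedding search:

```python
# Assumption: tiny hand-written synonym table mapping variants to a canonical name
SYNONYMS = {"garbanzo beans": "chickpeas", "chick peas": "chickpeas", "baby spinach": "spinach"}

def normalize(ingredient):
    """Lowercase, trim, and map known synonyms to one canonical name."""
    name = ingredient.strip().lower()
    return SYNONYMS.get(name, name)

def jaccard(a, b):
    return len(a & b) / len(a | b) if a | b else 0.0

def suggest(recipes, pantry, k=2):
    """Rank recipes by Jaccard similarity and list what each one still needs."""
    have = {normalize(i) for i in pantry}
    scored = []
    for recipe in recipes:
        needs = {normalize(i) for i in recipe["ingredients"]}
        scored.append((jaccard(have, needs), recipe["name"], sorted(needs - have)))
    scored.sort(key=lambda s: s[0], reverse=True)
    return [(name, missing) for score, name, missing in scored[:k] if score > 0]

# Hypothetical recipe records
recipes = [
    {"name": "Chickpea spinach curry",
     "ingredients": ["Garbanzo beans", "Baby spinach", "onion", "garlic"]},
    {"name": "Spinach omelette", "ingredients": ["eggs", "spinach"]},
    {"name": "Banana bread", "ingredients": ["bananas", "flour", "eggs"]},
]

for name, missing in suggest(recipes, ["spinach", "Chickpeas"]):
    print(name, "| still need:", missing)
```

The “still need” list is useful context for the generation step: the LLM can propose substitutions for missing items or turn them into a shopping list.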

Real-World Applications: Smart kitchen assistants, diet planning apps, cooking chatbots for restaurants.

Bonus Upgrade Ideas: Integrate with voice assistants (Alexa, Google Home), add pantry tracking (reminds you what you have left), generate shopping lists, or convert measurements automatically.

Each of these projects demonstrates how to combine an LLM with a retrieval system to create smarter AI apps. By grounding the model in real data (via RAG), you make it more useful and trustworthy. Pick one (or two!) that excites you, and start building — your portfolio will be proof that you can make AI that isn’t just clever, but also practical and fun for 2025–26.

If you are into data science, AI/ML, or AI engineering, don’t hesitate to build different types of projects. Keep reading, keep building, and work on fresh ideas. My mantra is simple: Learn → Build → Show → Get hired.

Thank you for reading this. Follow me here and on my socials for more such posts.

Follow me on socials:

Twitter: https://x.com/techwith_ram

Instagram: https://www.instagram.com/techwith.ram/