How Do AI Chips Work? (Neural Engines)

Running a complex neural network on your phone should have been impossible. After all, these models require millions (or even billions) of calculations, and doing that on a traditional CPU would be painfully slow and drain your battery in minutes.

So how is it that your smartphone today can run AI tasks like real-time photo enhancement, voice recognition, or AR effects so effortlessly?

The answer lies in a new category of processors: Neural Processing Units (NPUs), or what Apple calls the Neural Engine.

The Problem With Old Chips

Neural networks are powered by math; specifically, endless streams of matrix multiplications. Unfortunately, this math was a terrible fit for traditional processors.
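To make this concrete, here is a minimal Python sketch of one fully connected layer, y = W·x, written out as individual multiply-and-add steps. The function `dense_layer` and the toy numbers are hypothetical illustrations, not code from any particular framework.

```python
def dense_layer(weights, x):
    """Compute y = W @ x for one fully connected layer, one multiply-accumulate (MAC) at a time.

    Returns the output vector and the number of MAC operations performed.
    """
    outputs = []
    mac_count = 0
    for row in weights:          # one row of weights per output neuron
        total = 0.0              # running total (the "accumulator")
        for w, xi in zip(row, x):
            total += w * xi      # multiply two numbers, add the product to the total
            mac_count += 1
        outputs.append(total)
    return outputs, mac_count


W = [[1.0, 2.0, 3.0],
     [4.0, 5.0, 6.0]]
x = [1.0, 0.5, -1.0]
y, macs = dense_layer(W, x)
print(y, macs)        # [-1.0, 0.5] 6

# The count grows with the product of the layer sizes: a hypothetical
# 4096-input, 4096-output layer needs 4096 * 4096 MACs per input vector.
print(4096 * 4096)    # 16777216
```

Each output row is computed independently of the others, which is why hardware that can run thousands of these multiply-and-add steps at once pays off.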

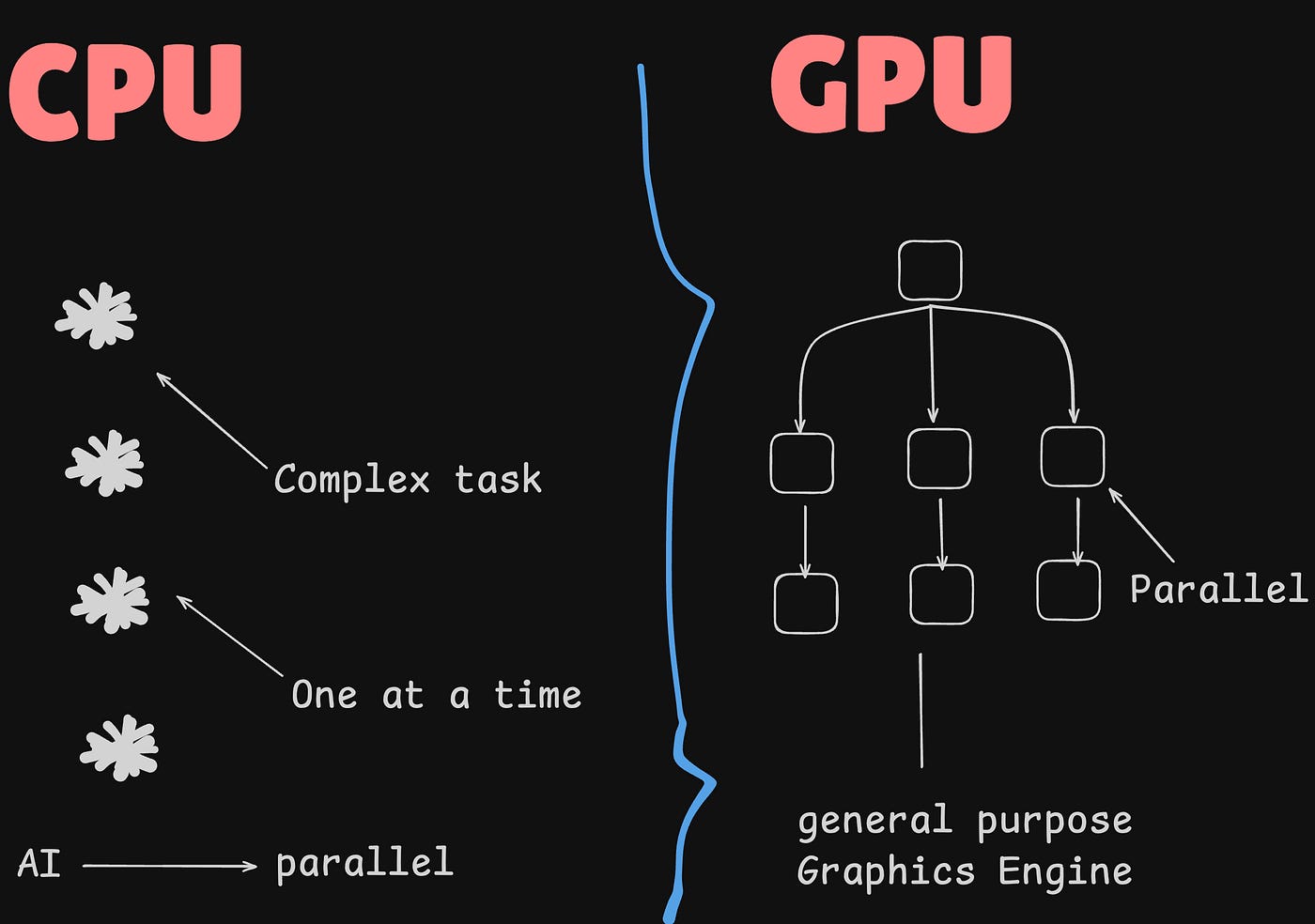

➤ CPUs excel at complex tasks handled largely one step at a time: perfect for logic-heavy programs, terrible for massive amounts of repetitive math.

➤ GPUs improved things with massive parallelism, but they’re still general-purpose processors built around graphics, carrying hardware and power overhead that AI workloads don’t need.

Both were good enough for early AI experiments, but they were never designed for the kind of scale modern neural networks demand.

The Rise of the Neural Processing Unit

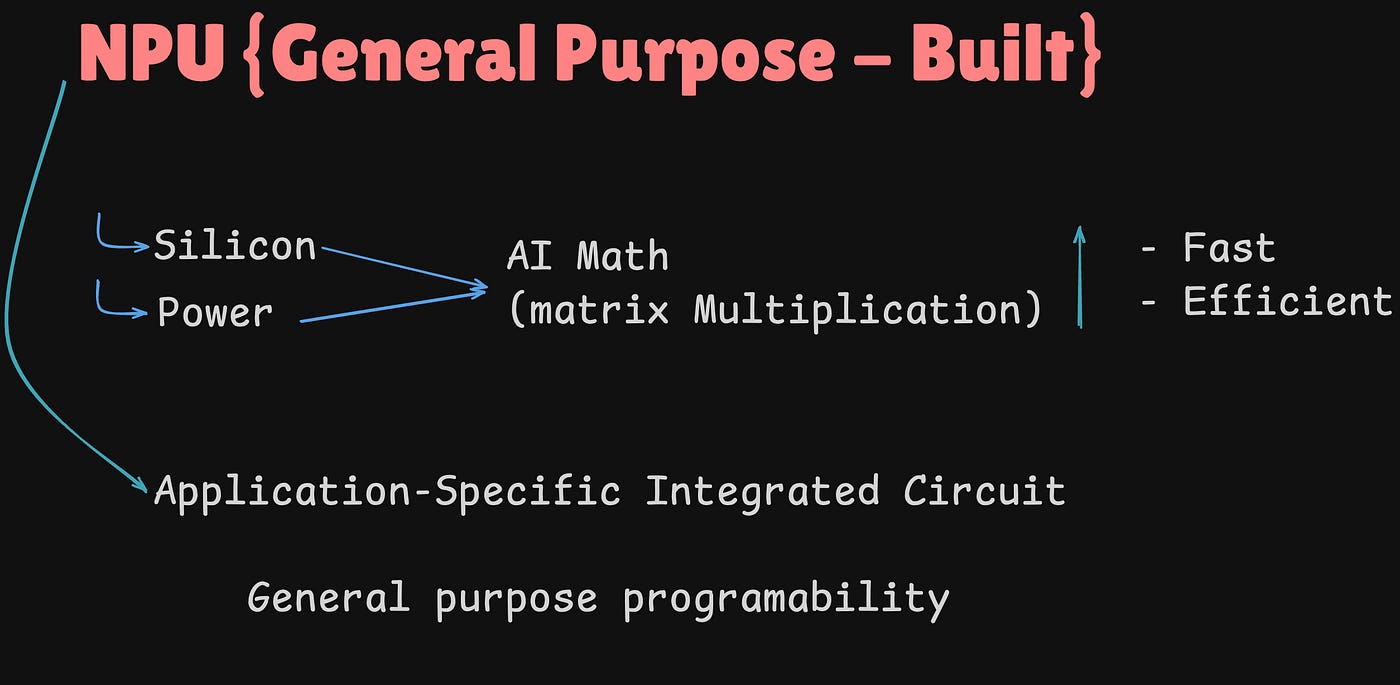

Enter the NPU, a third pillar of computing. Unlike CPUs and GPUs, NPUs shed general-purpose design and focus entirely on one job: running neural networks as efficiently as possible.

How?

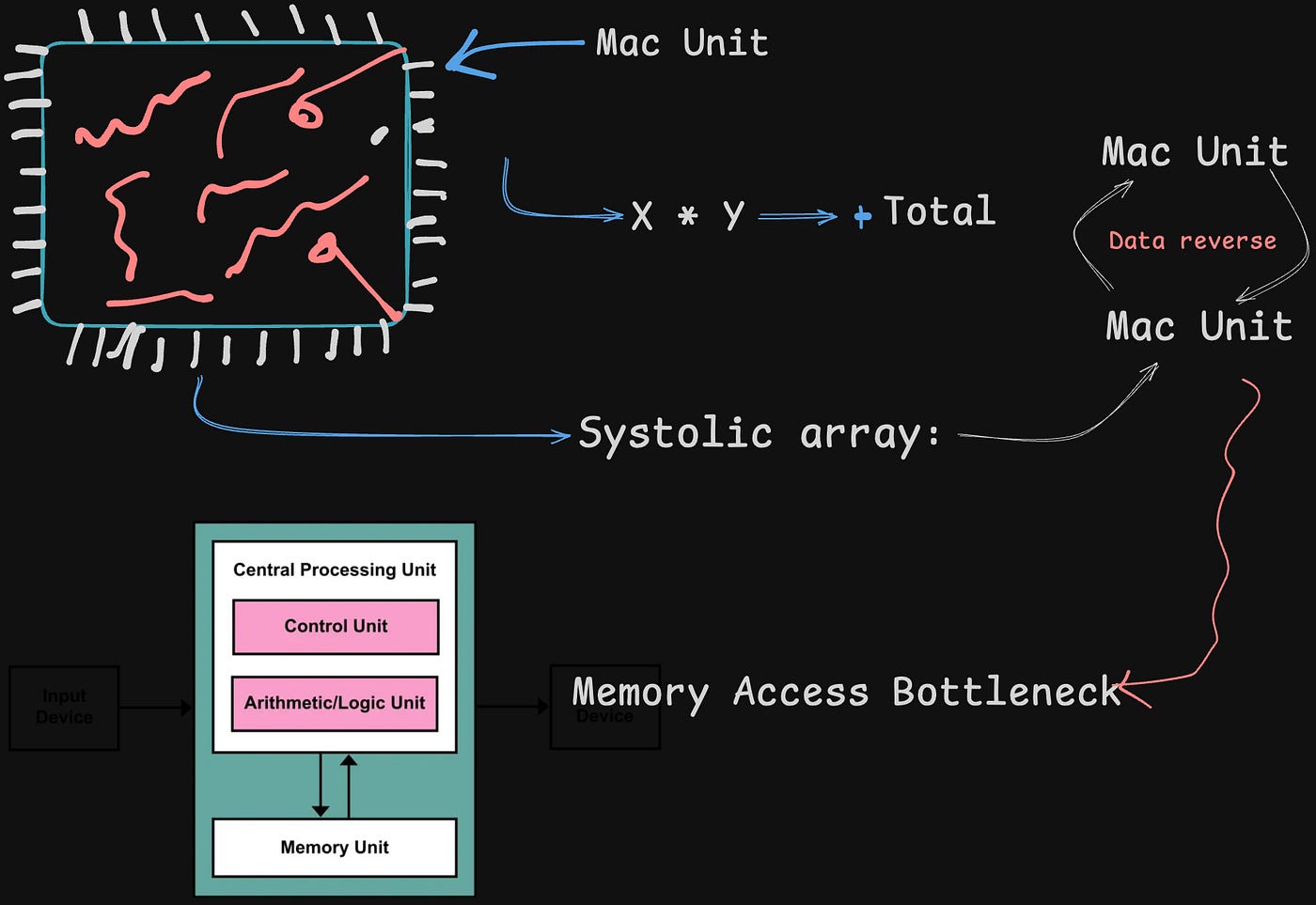

➤ Dedicated hardware (MAC units): The chip is packed with thousands of tiny multiply-accumulate (MAC) circuits whose sole job is to multiply two numbers and add the product to a running total. Repeated over and over, this single operation is the essence of matrix multiplication.

➤ Systolic arrays: Instead of constantly pulling data from slow memory, a systolic array passes data directly from one MAC unit to its neighbor in rhythmic, pipelined waves. Each value fetched from memory is reused by many units, which eases the memory bottleneck that limits CPUs and GPUs.

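➤ Low-precision arithmetic: Neural network inference rarely needs full 32-bit precision. Formats like FP16 (16-bit floating point) halve memory use and bandwidth compared with 32-bit floats, and because 16-bit arithmetic units are smaller, a chip can fit more of them and roughly double throughput, usually with little impact on accuracy.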
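The MAC units and systolic arrays described above can be sketched together as a toy, cycle-by-cycle simulation in Python. It assumes an output-stationary dataflow (one common systolic design, chosen here for illustration; it is not a description of any specific product, including the Apple Neural Engine). Each processing element (PE) keeps one output value, performs one MAC per cycle, and hands its inputs to its right and lower neighbors instead of re-reading them from memory. The function name `systolic_matmul` is hypothetical.

```python
def systolic_matmul(A, B):
    """Toy cycle-by-cycle simulation of an output-stationary systolic array computing A @ B.

    PE (i, j) keeps a running total for C[i][j]. Values of A move one PE to the
    right per cycle and values of B move one PE down per cycle, so each input
    fed in at the edge of the array is reused by a whole row or column of PEs.
    """
    n, k, m = len(A), len(B), len(B[0])
    acc = [[0] * m for _ in range(n)]    # stationary accumulators, one per PE
    a_out = [[0] * m for _ in range(n)]  # value each PE hands to its right neighbor
    b_out = [[0] * m for _ in range(n)]  # value each PE hands to the PE below it
    for t in range(n + m + k - 2):       # enough cycles for the last operands to reach the far corner
        a_next = [[0] * m for _ in range(n)]
        b_next = [[0] * m for _ in range(n)]
        for i in range(n):
            for j in range(m):
                if j == 0:   # left edge: row i of A enters, delayed ("skewed") by i cycles
                    s = t - i
                    a_in = A[i][s] if 0 <= s < k else 0
                else:        # otherwise take what the left neighbor held last cycle
                    a_in = a_out[i][j - 1]
                if i == 0:   # top edge: column j of B enters, skewed by j cycles
                    s = t - j
                    b_in = B[s][j] if 0 <= s < k else 0
                else:        # otherwise take what the PE above held last cycle
                    b_in = b_out[i - 1][j]
                acc[i][j] += a_in * b_in              # exactly one MAC per PE per cycle
                a_next[i][j], b_next[i][j] = a_in, b_in
        a_out, b_out = a_next, b_next
    return acc


print(systolic_matmul([[1, 2], [3, 4]], [[5, 6], [7, 8]]))  # [[19, 22], [43, 50]]
```

The skew makes A[i][s] and B[s][j] meet at PE (i, j) in the same cycle, so every PE computes its dot product while each operand is read from memory only once at the array's edge.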

Put simply, NPUs are factories for matrix math, and that makes them game-changers for AI.
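The low-precision point is easy to check numerically. This Python sketch uses the standard `struct` module's `'e'` format (IEEE 754 half precision) to round toy weights and inputs to FP16, then compares a dot product against a full-precision reference. The data are made-up deterministic values, and summing the FP16 products in full precision is an assumption of the sketch; it emulates the number format only, not an NPU’s speed.

```python
import math
import struct


def to_fp16(value: float) -> float:
    """Round a Python float to the nearest IEEE 754 half-precision (FP16) value."""
    return struct.unpack("<e", struct.pack("<e", value))[0]


# Toy, deterministic data standing in for one neuron's weights and inputs (assumption).
weights = [0.1 * math.sin(i) for i in range(512)]
inputs = [math.sin(i + 0.3) for i in range(512)]

# Reference: Python floats are 64-bit, stricter than the 32-bit baseline in the text.
y_ref = sum(w * x for w, x in zip(weights, inputs))

# Store weights and inputs as FP16; the products are still summed in full precision here.
w16 = [to_fp16(w) for w in weights]
x16 = [to_fp16(x) for x in inputs]
y_fp16 = sum(w * x for w, x in zip(w16, x16))

rel_diff = abs(y_ref - y_fp16) / abs(y_ref)
print(f"reference={y_ref:.5f}  fp16={y_fp16:.5f}  relative diff={rel_diff:.1e}")

# Storage for a hypothetical model with one million weights.
n_weights = 1_000_000
print("FP32 bytes:", n_weights * struct.calcsize("<f"))  # FP32 bytes: 4000000
print("FP16 bytes:", n_weights * struct.calcsize("<e"))  # FP16 bytes: 2000000
```

For these values the relative difference stays well below 1%, while the storage cost is exactly halved.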

Apple’s Secret Advantage

One of the best-known NPUs today is the Apple Neural Engine (ANE). But what makes it special isn’t just raw power; it’s how deeply it’s integrated into Apple Silicon.

Apple designed the ANE as part of a tightly integrated SoC (System on Chip), where every component works together. Here’s why that matters:

➤ Workload offloading: Pushing AI inference to the ANE frees the CPU and GPU for other system tasks. This helps prevent stutters and saves battery.

➤ Unified memory architecture (UMA): Instead of separate memory pools for the CPU and GPU (as in PCs with a discrete graphics card), Apple uses one high-bandwidth memory pool accessible by all processors. Data doesn’t have to be copied between pools, which saves both time and energy.

➤ System-level cache (SLC): Frequently used data is kept close to all processors, avoiding expensive trips to DRAM.
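A back-of-the-envelope calculation shows why skipping copies matters. This Python sketch estimates how long it takes to move data between two separate memory pools. The 64 MiB tensor size and the 16 GB/s link bandwidth are hypothetical values chosen only for illustration, and the simple model ignores latency and energy.

```python
def copy_time_ms(num_bytes: int, bandwidth_gb_per_s: float) -> float:
    """Time in milliseconds to move num_bytes over a link (1 GB = 1e9 bytes)."""
    seconds = num_bytes / (bandwidth_gb_per_s * 1e9)
    return seconds * 1e3


# Hypothetical values, for illustration only.
tensor_bytes = 64 * 1024 * 1024   # a 64 MiB block of activations
link_gb_per_s = 16.0              # assumed bandwidth between two separate memory pools

one_way = copy_time_ms(tensor_bytes, link_gb_per_s)
round_trip = 2 * one_way          # copy inputs over, then copy results back
print(f"one-way: {one_way:.2f} ms, round trip: {round_trip:.2f} ms")  # one-way: 4.19 ms, round trip: 8.39 ms
```

In this simplified model, a single shared pool removes the copy step entirely, and the saving repeats on every hand-off between processors.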

This vertical integration means Apple devices don’t just perform well in benchmarks; they feel fast and efficient in real-world use.

Beyond TOPS: Real-World AI Performance

You’ll often hear chipmakers brag about “TOPS” (tera-operations per second, or trillions of operations per second) when marketing NPUs. The figures are impressive, but raw numbers don’t tell the full story.

The real-world performance of the Apple Neural Engine comes from its system-level design: the combination of unified memory, a shared cache, and workload balancing across the CPU, GPU, and NPU. It’s not just about how many operations per second the chip can perform, but whether the rest of the system can keep it supplied with data and work.
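One way to see why peak TOPS can mislead is a roofline-style estimate, a standard performance model used here as an illustration rather than Apple’s own methodology. The sketch computes a peak figure from a hypothetical number of MAC units and clock speed, counting each MAC as two operations (a common but not universal convention), then caps it by how fast memory can supply data. All hardware numbers are assumptions.

```python
def peak_tops(mac_units: int, clock_ghz: float) -> float:
    """Peak tera-operations per second, counting each MAC as 2 operations (multiply + add)."""
    return mac_units * 2 * clock_ghz * 1e9 / 1e12


def attainable_tops(peak: float, bandwidth_gb_per_s: float, ops_per_byte: float) -> float:
    """Roofline estimate: throughput is capped by compute or by memory traffic, whichever is lower."""
    memory_bound = bandwidth_gb_per_s * 1e9 * ops_per_byte / 1e12
    return min(peak, memory_bound)


# Hypothetical accelerator; numbers chosen only for illustration.
peak = peak_tops(mac_units=8192, clock_ghz=1.0)
print(f"peak: {peak:.1f} TOPS")  # peak: 16.4 TOPS

# Arithmetic intensity: operations performed per byte fetched from memory.
for intensity in (10, 100, 1000):
    t = attainable_tops(peak, bandwidth_gb_per_s=100, ops_per_byte=intensity)
    print(f"{intensity:>5} ops/byte -> {t:.1f} TOPS")  # prints 1.0, 10.0, then 16.4 TOPS
```

At low arithmetic intensity, this hypothetical chip reaches only a fraction of its advertised peak; data reuse, shared caches, and unified memory are what push real workloads toward the compute limit.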

Final Thoughts

The Neural Engine is more than just another block on the chip; it represents a new pillar of computing. Alongside CPUs and GPUs, NPUs are now essential for the AI-powered experiences we take for granted every day.

Apple’s approach highlights an important truth: raw performance numbers don’t tell the whole story. Real breakthroughs come from system-level thinking, where hardware and software are designed hand-in-hand.

Without NPUs, AI on your phone would still be impractical. With them, it’s invisibly and seamlessly enhancing your photos, transcribing your voice, and powering intelligent features in the background.

The NPU turned the “impossible” into the everyday. And it’s only the beginning.
