CPU vs. GPU in Distributed Computing: Which One Should You Choose?

As datasets grow and AI models become more complex, a single machine often struggles to keep up. Distributed computing spreads workloads across many nodes so they can scale, but choosing between CPU-based and GPU-based processing on those nodes is key to optimizing performance.

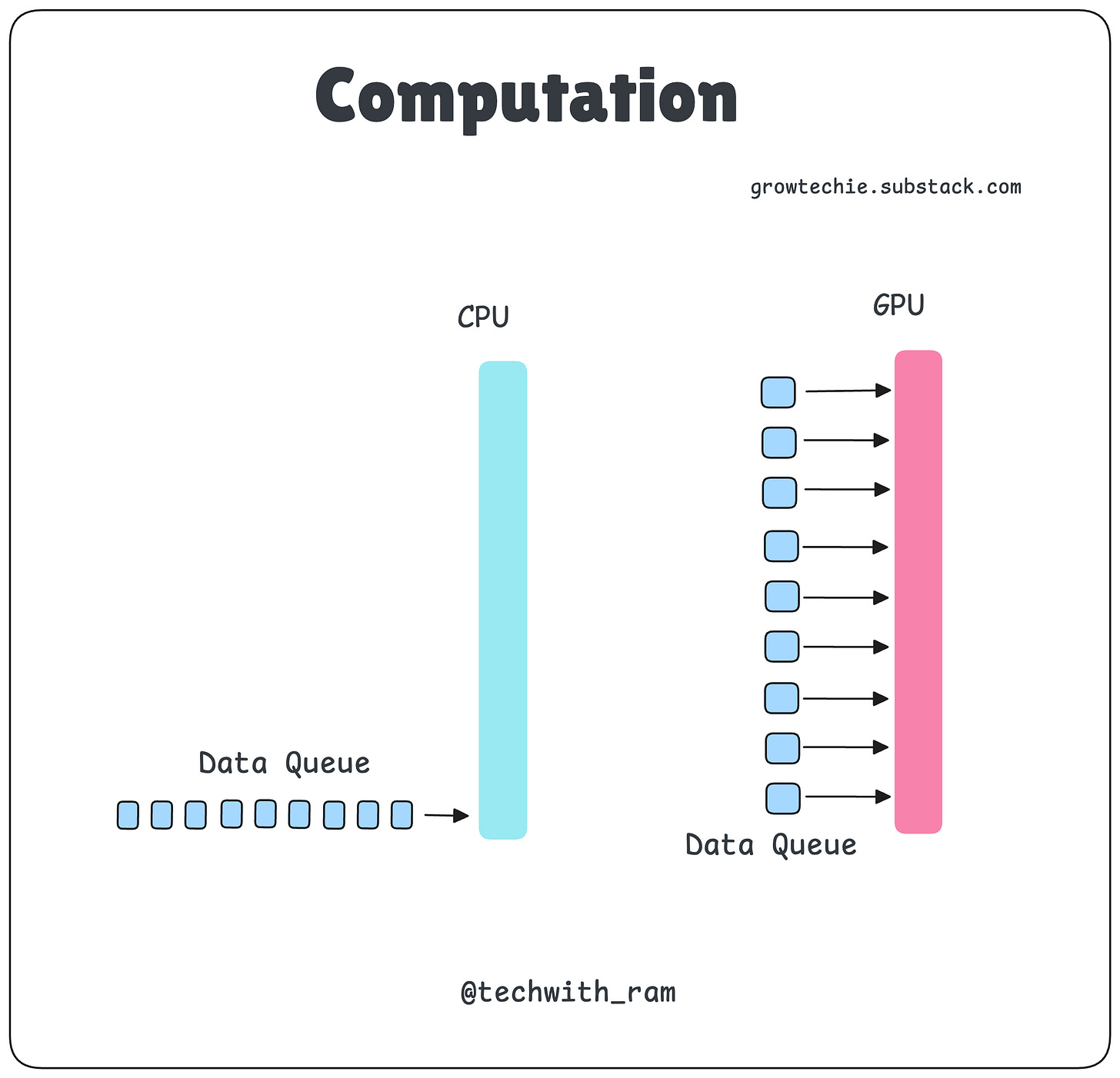

1. Understanding CPU vs. GPU Computing

| Feature | CPU (Central Processing Unit) | GPU (Graphics Processing Unit) |
| --- | --- | --- |
| Architecture | Fewer cores (4-64), optimized for sequential tasks | Thousands of cores, designed for parallel processing |
| Workload Type | Best for single-threaded tasks & logic-heavy operations | Excels in parallel computations & deep learning |
| Memory Access | Large cache, optimized for fast memory access | High memory bandwidth, suited for batch processing |
| Use Cases | Data preprocessing, databases, real-time applications | Neural networks, numerical simulations, AI/ML workloads |
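To make the sequential-versus-parallel contrast concrete, here is a minimal sketch based on Amdahl's law, which bounds the speedup of a job when only part of it can run in parallel. The function name `amdahl_speedup` and the worker counts are illustrative assumptions, and the model is idealized: it treats every core as an equal worker and ignores memory transfers and scheduling overhead.

```python
def amdahl_speedup(parallel_fraction: float, workers: int) -> float:
    """Upper bound on speedup when only part of a job can run in parallel."""
    if not 0.0 <= parallel_fraction <= 1.0:
        raise ValueError("parallel_fraction must be between 0 and 1")
    if workers < 1:
        raise ValueError("workers must be at least 1")
    serial_fraction = 1.0 - parallel_fraction
    # The serial part never gets faster; only the parallel part is divided up.
    return 1.0 / (serial_fraction + parallel_fraction / workers)


if __name__ == "__main__":
    # 16 workers stands in for a CPU, 4096 for a GPU (illustrative counts only).
    for fraction in (0.50, 0.95, 0.99):
        cpu_like = amdahl_speedup(fraction, 16)
        gpu_like = amdahl_speedup(fraction, 4096)
        print(f"parallel={fraction:.2f}  16 workers: {cpu_like:6.1f}x  "
              f"4096 workers: {gpu_like:6.1f}x")
```

The takeaway of the sketch is that thousands of cores only pay off when almost all of the work is parallel; a job that is half sequential gains very little from extra cores.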

2. When to Use CPU vs. GPU in Distributed Computing

Use CPUs for:

- Data preprocessing & ETL workflows
- Sequential and logic-heavy computations
- Low-latency, real-time applications

Use GPUs for:

- Training deep learning models
- Large-scale simulations and AI workloads
- High-performance computing tasks
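The rules of thumb above can be sketched as a small placement function. Everything here is hypothetical and for illustration only: the `Task` fields, the `choose_device` helper, and the example task names are not part of any scheduler's real API, and a production system would also weigh data size, transfer cost, and hardware availability.

```python
from dataclasses import dataclass


@dataclass
class Task:
    name: str
    data_parallel: bool      # same operation applied across many elements?
    branch_heavy: bool       # dominated by control flow and conditionals?
    latency_sensitive: bool  # must answer individual requests quickly?


def choose_device(task: Task) -> str:
    """Return "gpu" or "cpu" using the rules of thumb from this section."""
    # Real-time and logic-heavy work stays on the CPU.
    if task.latency_sensitive or task.branch_heavy:
        return "cpu"
    # Bulk, uniform arithmetic is what GPUs are built for.
    if task.data_parallel:
        return "gpu"
    # Default to the CPU when nothing suggests a GPU would help.
    return "cpu"


tasks = [
    Task("etl_cleanup", data_parallel=False, branch_heavy=True, latency_sensitive=False),
    Task("train_cnn", data_parallel=True, branch_heavy=False, latency_sensitive=False),
    Task("fraud_check_api", data_parallel=False, branch_heavy=True, latency_sensitive=True),
]
placement = {task.name: choose_device(task) for task in tasks}
print(placement)
```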

3. Hybrid Approach: CPU + GPU for Maximum Efficiency

Many modern distributed computing architectures combine CPUs and GPUs to maximize performance:

- CPUs handle complex logic, control flow, and sequential tasks
- GPUs accelerate intensive computations, making AI/ML training faster
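One common way to combine the two is a producer/consumer pipeline: CPU threads parse and validate records while a second stage consumes them in batches. The sketch below uses only the Python standard library, so `accelerated_compute` is a plain-Python stand-in for a GPU kernel, not a real GPU call; the function names, record format, and batch size are all assumptions made for illustration.

```python
import queue
import threading

_DONE = object()  # unique marker telling the consumer that input is finished


def preprocess(raw: str) -> list[float]:
    """CPU stage: parsing and validation, i.e. branchy, sequential work."""
    return [float(x) for x in raw.split(",") if x.strip()]


def accelerated_compute(batch: list[list[float]]) -> list[float]:
    """Stand-in for a GPU kernel: the same arithmetic applied to every row."""
    return [sum(v * v for v in row) for row in batch]


def run_pipeline(raw_records: list[str], batch_size: int = 2) -> list[float]:
    ready: queue.Queue = queue.Queue(maxsize=8)  # bounded so the CPU cannot run far ahead
    results: list[float] = []

    def producer() -> None:
        for record in raw_records:
            ready.put(preprocess(record))
        ready.put(_DONE)

    def consumer() -> None:
        batch: list[list[float]] = []
        while True:
            item = ready.get()
            if item is _DONE:
                break
            batch.append(item)
            if len(batch) == batch_size:
                results.extend(accelerated_compute(batch))
                batch = []
        if batch:  # flush the final partial batch
            results.extend(accelerated_compute(batch))

    threads = [threading.Thread(target=producer), threading.Thread(target=consumer)]
    for thread in threads:
        thread.start()
    for thread in threads:
        thread.join()
    return results


print(run_pipeline(["1,2", "3,4", "5"]))
```

Batching matters in this design because an accelerator is most efficient when it receives many rows at once, while the bounded queue keeps the two stages working at roughly the same pace.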

Final Takeaway:

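The right choice depends on your workload and scalability needs. For deep learning training, large-scale simulations, and other highly parallel high-performance computing, GPUs are usually essential, while CPUs remain critical for control flow, data preprocessing, and low-latency work.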

Follow me on socials:

Twitter: @techwith_ram

LinkedIn: @ramakrushnamohapatra